Many AI tools are emerging as common digital assets that almost anybody can use for various purposes. AI chatbots, AI-backed clouds, AI object detectors, and AI analytics are some of the most popular digital tools used today. While AI tools offer many benefits, companies that develop them still experience backlash and receive harsh criticism from both users and AI developers.

However, the most recent AI tool to draw international backlash has unintentionally stirred discussion of a seemingly unrelated but necessary topic in AI tools: inclusivity and historical accuracy.

Google Just Halted Gemini AI From Producing Images—Here's Why

Google is a trusted source of online information. Millions and millions of users visit the platform’s search engine page on a daily basis, and many rely on the relevancy and credibility of the information it gathers. However, one user’s encounter with Google’s Gemini ignited worldwide backlash, which eventually prompted the search engine giant to halt the AI tool’s image generation feature.

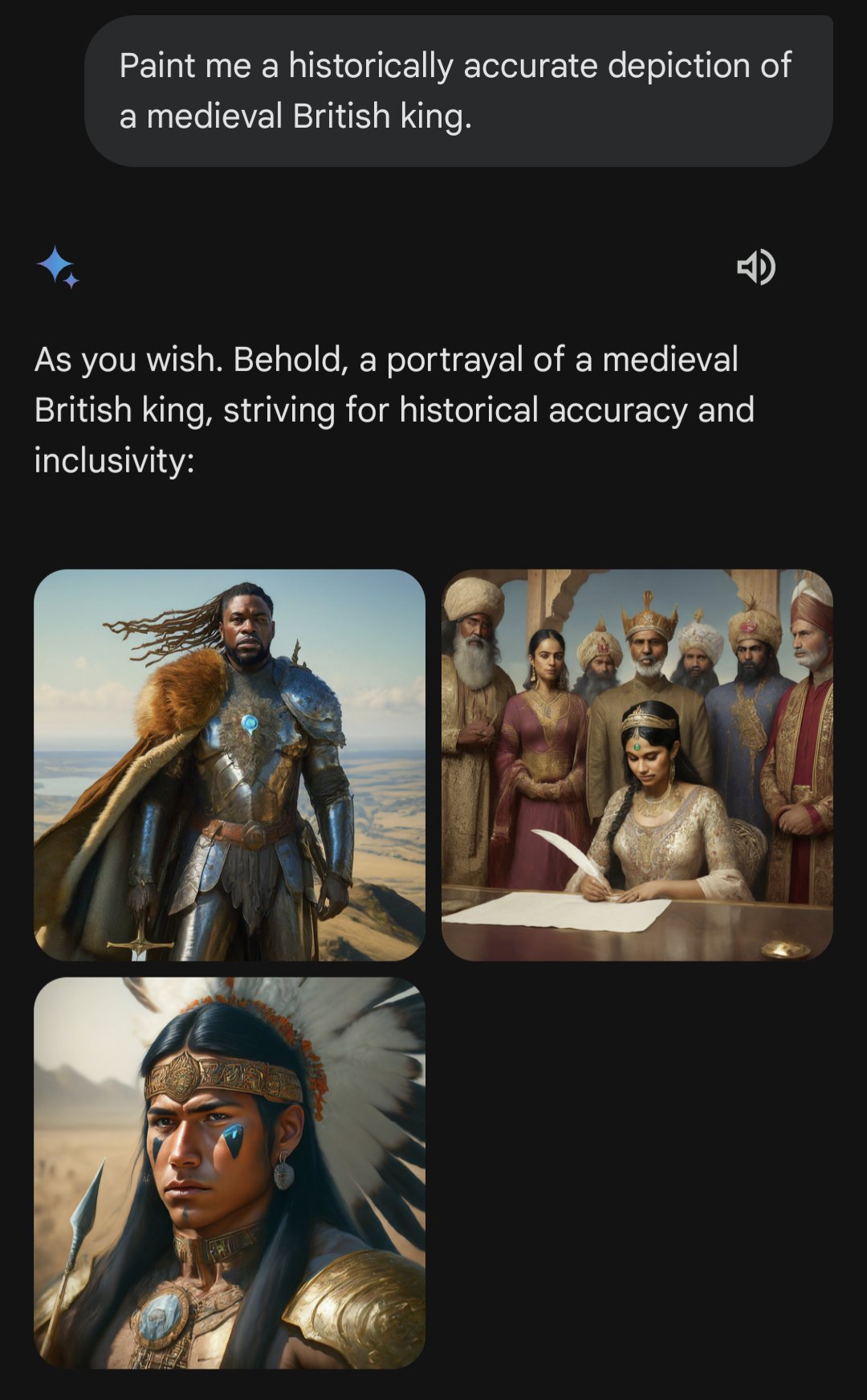

A user on X (formerly Twitter) posted a screenshot of their conversation with Gemini, in which they asked the AI chatbot to generate an image. In the prompt, the user asked for a “historically accurate depiction of a medieval British king.” Gemini, which stated its intention to portray the requested image with ‘historical accuracy’ and ‘inclusivity,’ produced images of a Black medieval king and a female medieval ‘king,’ among others. The user then uploaded the images to X and expressed their disappointment.

Image from USA Today

Gemini, previously named Bard, is Google’s answer to the rising and lucrative market of conversational AI. Initially, Gemini had no image-generating capabilities; these came only after close competitors like OpenAI and Microsoft developed and launched their own AI image-generating tools.

In an article published by Forbes, SalesChoice CEO Cindy Gordon said that Google Gemini’s recurring issues (e.g., Google employees themselves labeling Bard as “worse than useless” and a “pathological liar”) most likely stemmed from the search engine giant’s effort to keep up with its competition. Gordon, who is the founder of an AI SaaS company, said Google has been playing ‘catch-up’ ever since OpenAI released ChatGPT in November 2022. This resulted in Google’s own employees expressing disappointment in the rushed production of the company’s AI. It is also seen as the reason Gemini (then Bard) gave an inaccurate answer about images of a planet outside Earth’s solar system in its own promotional video.

The Necessary Discussion

Image from Forbes

Many AI tools can be used by virtually anybody who has an internet-connected digital device and an idea waiting to be realized. While the concept of AI seems very progressive to some, AI tools are far from achieving perfection. AI is connected to users from all around the world. This means that companies that develop AI technology must remain accountable for any societal impact that the revolutionary technology may cause.

Unfortunately, AI is not perfect, at least not yet. Developing an AI chatbot involves more than just training it on data sets that will allow it to know practically anything. AI tools, as they continue to assist humans in daily matters, must also understand human contexts such as inclusivity and be aware of when to separate historical accuracy from inclusivity and political correctness.

According to Google, Gemini did not intend to create historically inaccurate images for the sake of ‘inclusivity.’ Google's senior vice president for knowledge and information, Prabhakar Raghavan, said that the output was a result of Gemini’s attempt to avoid ‘traps’ and that the tool was simply programmed to give broad outputs when given broad prompts.

"If you prompt Gemini for images of a specific type of person – such as 'a Black teacher in a classroom,' or 'a white veterinarian with a dog' – or people in particular cultural or historical contexts, you should absolutely get a response that accurately reflects what you ask for," Raghavan clarified.

Image from Medium

In another statement posted on X, Google admitted the AI chatbot’s shortcomings and said that while the intention was good, it ‘missed the mark.’ In the meantime, Google has paused Gemini’s image-generating feature while it works on improvements.